A Conceptionary for Speech & Hearing in the Context of Machines and Experimentation

by

David R. Hill

Copyright (c) David R. Hill 1976, 2001. All rights reserved.

No part of this work may be reproduced or transmitted in any form or by any means, electronic, mechanical,

photocopying, recording or otherwise without prior written permission of the author

Please click here if you do not see a section menu on the left side, or if this multiframe page is inside someone else's frame

Introduction

A conceptionary, like a dictionary, is useful in learning the meanings of words. Unlike a dictionary, it is designed to make the reader work a little, and to develop associations and a conceptual framework for the subject of the conceptionary. It is not an encyclopaedia, though it has as one function, in new or inter-disciplinary areas, the drawing together and reconciliation of disparate sources. Like Marmite (a yeast extract), it is designed to be nutritious and highly concentrated. Do not be put off by the consequent strong flavour. The aim is to achieve broad coverage of material from areas such as psychology and physics, as well as from speech recognition and synthesis, because such material is highly relevant to speech researchers and often difficult to track down. Historical material is included for the same reasons. The view of the field expressed is a rather personal view. The author wishes to thank Mr. R.L. Jenkins for his careful reading of the manuscript and many useful comments and corrections, while laying sole claim to any errors, ommissions, or lack of clarity that remain.

Note that although URLs that allow access to some of the major speech research centres are included within this document, many more centres exist. A search using the google search facility, with the phrase "speech communication research labs" will turn up a large selection of these, but not necessarily including any of those provided below!

Please email any comments, corrections or suggestions to hill@cpsc.ucalgary.ca. While every effort has been made to present accurate information, it is not intended to support safety critical systems. Material should always be cross-checked with other sources.

AH1/AH2

Amplitudes of two kinds of hiss used as part of the excitation arrangements of the Parametric Artificial Talker (PAT -- the original resonance analog speech synthesiser). Hiss 1 is fed into the formant branch of the filter function, and represents aspiration noise, Hiss 2 is fed to a separate filter and represents the noises (such as the /s/ in sun and the /sh/ in shun) produced by forcing air through a narrow constriction towards the front of the oral cavity, and therefore assumed to be relatively unaffected by the usual oral resonances, or formants.

Ax /Ao

Amplitude of voiced energy excitation (often called larynx amplitude) injected into the filter function of a speech synthesiser. Corresponds to voicing effort in natural speech.

absolute refractory period

See nerve cell

acoustic analog

A construct that models the frequency-time-energy pattern of some sound without regard to how it is produced, or the mechanism involved. Such a model is only constrained by the bandwidths and dynamic range inherent in its structure. See also resonance analog, physiological analog and terminal analog.

acoustic correlates

Those acoustic phenomena that correlate (or necessarily co-occur) with articulatory events. If they can be discovered and well defined, they may be used to make inferences about articulation. For example, a burst of noise usually occurs when a blockage in the vocal passages is released, so the detection of a burst of noise is some evidence that the input speech to a recogniser contained a stop sound. Unfortunately, the acoustic correlates of articulatory events are are not well-defined. Speech recognisers require means of normalisation, prediction, extrapolation, and the like.

acoustic domain

Description or existence in terms of the amount of energy present at given times and frequencies. A spectrogram is an acoustic domain description of speech. It is contrasted with time domain descriptions. A time domain description of speech is the time waveform.

acoustic intensity

Is defined as the average power transmitted per unit area in the direction of wave propagation.

I = p(rms)2/r.c watts/square metre where p(rms) is the root-mean-square effective acoustic pressure, r is the density of the medium (air at 20 degrees C and standard pressure gives 1.21 kg/cubic metre), and c the speed of sound in the medium (same air gives c=343 metres/sec).

acoustic intensity level

See sound intensity level

acuity

The power of discrimination in some sensory mode. See difference limen.

afferent

Incoming from the periphery to the central nervous system. Thus afferent nerves are sensory nerves running in from the sensory receptors scattered around an organism.

affricate

A speech sound fromed by the close juxtaposition of a stop and a fricative, in that order. For English, voiceless and voiced examples occur at the start of "chips" and "judge" respectively. The affricate is distinguished from the corresponding stop-fricative combination chiefly by the much shorter burst of friction noise which accompanies the explosive separation of the articulators (thus my chip is different from might ship, a problem in notation solved by introducing a hyphen to distinguish the juncture).

AGC

see automatic gain control

allophone

see phoneme

Ames room

A room of unusual shape, designed by Professor Ames of Princeton University, so that the retinal image of the room from a particular view point coincides exactly with the image that a normal room would produce. People walking about the room appear to shrink and grow as they occupy larger and smaller parts which are further and nearer (respectively), because the brain assumes the room is normally shaped, and therefore misinterprets the changing image size as size change instead of distance change. Intellectual knowledge does not dispel the illusion. Experience of trying to do things inside the room can cause the room to be perceived as it really is. The effect lends itself to dramatic demonstration on film, as it depends on viewing with only one eye. See also the moon illusion.

alveolar ridge

As the hard palate runs towards the top front teeth from behind, a buttress enclosing the roots of the teeth is encountered. This is called the alveolar ridge.

anacrusis

A term borrowed from music. In music, an anacrusis is a series of notes that are not counted towards the total note duration in a bar—they are "extra". Jassem uses the term anacrusis for what Abercrombie and others have called proclitic syllables—syllables at the end of a rhythmic unit in speech that belong, grammatically speaking, with the following rhythmic unit. Jassem chose the term deliberately because, in his theory of British English speech rhythm, the anacruses are not to be counted in the duration of the rhythmic unit (called a footby Abercrombie and others or a rhythm unit by Jassem). See isochrony, foot, proclitic, enclitic.

articulation

The process of placing the tongue, lips, teeth, velum, pharynx and vocal folds in the state or succession of states required for some utterance. Also used for one such state (e.g. "consonant articulation"). There is unconcious ambiguity in just what is meant in normal use (e.g. "He articulated clearly" implying that articulation itself is "sound", rather than a placing of the articulators in some "articulatory posture".. One could easily articulate an isolated consonant, without actually producing sound. Sound only results when excitation energy of some sort is supplied (e.g. by using muscles to expel air from the lungs so that the vocal folds vibrate.

articulation index

A method of estimating speech intelligibility based upon the division of the speech spectrum into 20 bands contributing equally to intelligibility. It is assumed that, for each band, the intelligibility contribution is proportional to the signal to noise ratio in the band, being 100% for s/n greater than or equal to 30 db and zero for s/n less than or equal to 0 db. The original method (French & Steinberg) using 20 bands of equal weight, and of width related to the critical band distribution, has been adapted to 15 1/3 octave bands with appropriately differing weights, which more suited to the measuring apparatus that is easily available. Under some circumstances (e.g. when given directional cues or special instructions, etc.) speech may be intelligible even when masked at an overall signal to noise ratio of -10 or even -15 db. This should not be taken as contradiction of the validity of articulation index calculations.

articulatory synthesis

Speech synthesis based on the use of articulatory constraints. Articulatory constraints may be applied to the generation of parameters for any synthesiser but true articulatory synthesis depends on the articulatory constraints being inherent in the implementation of the synthesis system, as the reproduction of all the details is otherwise very difficult if not impossible. A transmission-line analog emulates the propagation of sound waves in the acoustic tube formed by the vocal tract directly. Thus the full spectrum is produced, with correct energy interaction for nasal sounds, and correct shapes for the formants. True articulatory synthesis is to other approaches to synthesis as true ray tracing is to polygonal modeling in computer graphics. By modeling the physics of reality directly, articulatory synthesis achieves a high degree of fidelity to nature. The problems arise from a need for a great deal of computational power, the need to manage the control problem successfully, and the need for accurate articulatory data. Ideally, the various energy sources should be emulated on the basis pf physics but the only complete articulatory synthesis system currently available (originally a commercial development by Trillium Sound Research for the NeXTSTEP operating system but now under a General Public Licence from the the Free Software Foundation) injects appropriate waveforms at appropriate places in the vocal tract, because of a lack of computational power, as well as lack of suitable models. The research is continuing. See the paper "Real-time articulatory speech-synthesis-by-rules" for more detail.

artificial ear

Not a prosthetic device (yet). A device that simulates the acoustics of the head and the ear cavity of a human being, measuring (by means of a calibrated transducer) the pressure that would occur at the ear-drum of a real ear. It is used in determining the total effect of earphones plus cushions on the fidelity of reproduction of speech without resorting to sophisticated, highly trained (i.e. expensive) real listeners for subjective testing. The latter is a far more reliable method of evaluation. See pinna.

artificial larynx

A device used for prosthesis in laryngectomized persons. It is held against the throat and, when activated by a button, it injects vibratory energy into the vocal tract as a substitute for the lost voice capability. It is not too difficult to use, but less convenient than oesophagal speech.

artificial mouth

Not a prosthetic device (yet). A model designed to simulate the acoustic characteristics of the head and mouth upon the radiated sound. Used, for example, in evaluating microphones.

ARU

See audio response unit.

aspiration

The escape of breath through a relatively unconstricted vocal tract, without accompanying vibration of the vocal folds. Some turbulent or friction noise is generated but it is not clear whether this is due to turbulence at the glottis or along the walls of the vocal tract, in general.

ASR

See automatic speech recognition

audibility

The degree to which an acoustic signal can be detected by a listener. See also intelligibility.

audio response unit

A peripheral device attached to a computer to allow voice messages to be sent to users connected by telephone. A Touch-Tone phone is used in most current applications to provide input. The ARU may replay direct recordings, or compressed recordings, or it may synthesise the speech using rules based on general speech knowledge. The commonest current method uses compressed recordings based on Linear Predictive Codingg (LPC) parameters.

audiogram

See audiometry

audiometry

The process of determining the frequency distribution of a subject's absolute threshold of hearing (in db, compared to "normal" threshold). Pure tone stimuli at selected frequencies are used to sample this distribution by presenting them at various intensities and noting response/no-response from the patient. Quiet conditions (noise not greater than 10 db below threshold of hearing in the booth), noise shielded earphones, and special instruments are used. Each ear is evaluated separately. The plot of threshold against frequency for the two ears is called an audiogram, and shows threshold in db (referenced the normal threshold) as a function of frequency.

audiometer

An instrument calibrated to produce tones and other sounds at known intensities. It is designed to produce audiograms for subjects whose hearing is to be tested.

auditory cortex

That part of the cortex (the convoluted outer layer of the brain, representing the highest degree of evolution) that is primarily responsible for processing auditory signals (i.e. those nerve impulses originating from the ear—specifically from the basilar membrane).

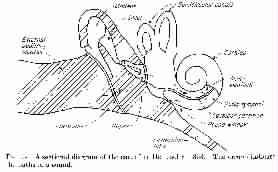

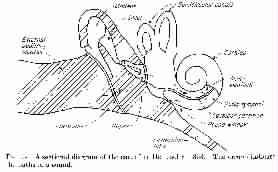

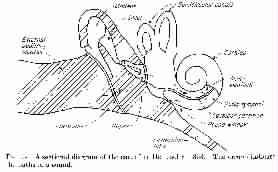

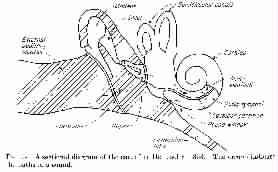

auditory meatus

In full, the external auditory meatus. The canal connecting the eardrum to the outside air. The external opening of the meatus is within the area of the pinna. (The pinnae are the things we are talking about when we say "My ears are cold"). The meatus is slightly curved (which prevents the ear-drum being viewed without instruments - e.g. an otoscope); it is about 3 cm long and about 1 cm or less in diameter. Hairs and wax glands protect against ingress of insects etc.Excess wax can cause some loss of hearing. See also pinna.

auditory nerve

See modiolus

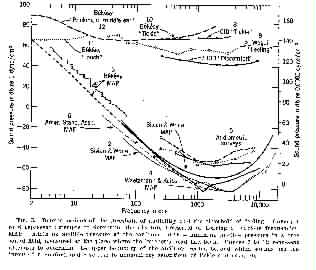

auditory pathways

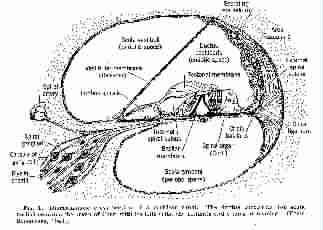

The paths traced by the nerves leading from the organ of Corti in the cochlea to the auditory cortex.

In logical order, the significant staging posts are: organ of Corti: Corti ganglion: ventral and dorsal cochlear nucleii: superior olive: inferior colliculus: medial geniculate: and finally auditory cortex.

The signals are fed roughly equally to both halves of the brain (cross-overs occuring at the level of medulla and the path between there and the inferior colliculus): also side branches occur in the neighbourhood of the medulla (the medulla embraces the trapezoid body, the dorsal and ventral cochlear nucleii, and the two superior olives), at the lateral lemniscate and nucleus, and at the cerebellar vermis. The dorsal cochlear nucleus sends all its outgoing processes to the contralateral side of the brain, though there are connections back in the inferior colliculus region. Based on their neurophysiological studies of cats, Whitfield and Evans (Keele University, U.K.) have stated that the primary function of the auditory cortex is the analysis of time pattern rather than stimulus frequency.

Afferent acoustic pathways

From Handbook of Experimental Psychology,

S.S. Stevens (ed.). © 1951 John Wiley & Sons.

Used with permission of John Wiley & Sons.

automatic gain control

A device often included in electronic audio processing systems to limit the amplitude of the signal without actually clipping it. A long-term average of the speech energy is taken and used to control the gain of the amplifier to the system, turning the gain down as the input speech energy increases. Thus signal levels may be kept near optimum levels without overload. If required, the control signal may be transmitted along with the compressed signal to allow re-expansion to the original dynamic range. Such a device is called a compander (naturally). Compression involves distortion as well. The time constants involved in deriving the control signal are important. Faster action is more likely to prevent overload, but produces greater distortion. Longer time constants may be used where the average signal is not expected to vary quickly.

automatic speech recognition

The process of automatically (i.e., by machine) rendering spoken sounds as correct language symbols. It has been contrasted with automatic speech understanding which means the production of a correct response by a machine for given verbal imput. The problem of recognising unlimited vocabulary for arbitrary speakers in continuous speech remains an unsolved goal. Most current systems require some degree of training, recognise relatively restricted vocabularies, and only deal with isolated words or phrases (IWR). Continuous Speech Recognition (CSR) is necessary for normal speech as there are no breaks or boundaries between words in normal utterances, unless a pause occurs. It is quite difficult to speak in such a way that a pause is inserted before every word.

Ax

The amplitude of glottal excitation supplied to a synthesis model (the "larynx amplitude").

axon

The output process of a nerve cell (a process is an outgrown limb or filament).

bandwidth

That contiguous band of frequencies that may be transmitted through some signal processing system with no more than 3 db loss of power (i.e., no frequency in the band is attenuated to less than half the original power). A signal is often loosely ascribed a "bandwidth". This really refers to the bandwidth of the processing system that would be needed to process the signal without introducing unacceptable distortion or loss of significant components. For complete fidelity, the bandwidth of the processing system should at least equal the frequency spectrum range of the signal's significant components.

bar

In the sense of "unit of measurement" a bar is the pressure exerted by the "standard atmosphere". Unfortunately, as with early temperature scales, a slight miscalculation has meant that the standard atmosphere accepted today actually exerts a pressure of 1029 millibars (1.029 bars). In modern terms: 1 bar = 0.1 MPa (MegaPascals) where a Pascal is 1 nt/m2 (Newton per Square Metre), and is the standard SI unit of pressure.

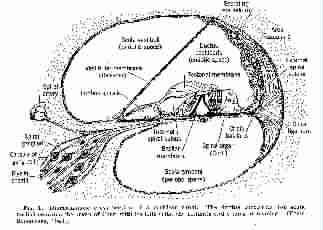

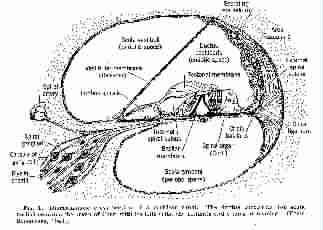

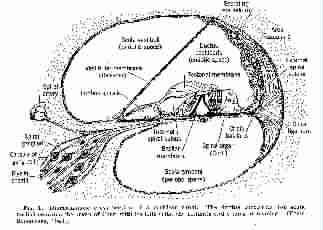

basilar membrane

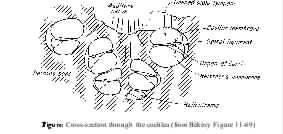

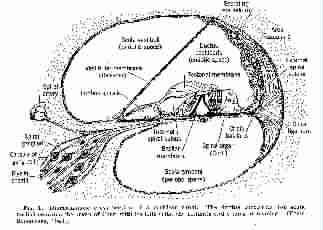

The cochlea, or organ of hearing, is a spiral chamber. It is divided, longitudinally, into two thinner tubes (the scala vestibuli and scala tympani) by the cochlear partition. The cochlear partition is itself a duct, triangular in cross section, bounded on the shortest side by the outer wall of the cochlear wall, by Reissner's membrane, and -- on the third side -- a combination of a bony shelf and the basilar membrane. The bony shelf is cantilevered out from the modiolus, or centre-post, of the spiral chamber formed by the cochlea. The bony shelf starts wide near the oval and round windows at one end of the cochlear spiral, and narrows down towards the helicotrema, an opening at the other end of the cochlea's spiral tube that joins the two perilymph-filled scala. Thus the basilar membrane starts off narrow by the windows, and becomes progressively wider towards the helicotrema, since the tuve forming the cochlea is fairly constant in diameter. The basilar membrane is about 3.5 cm long and varies in width from 0.1 mm at the window end to 0.5 mm at the helicotrema end, making about 2.5 turns in the spiral. The scala are about 2 mm in diameter throughout. Bekesy remarks sanguinely that "it is difficult to carry out experiments with dimensions as small as these." The basilar memberane bears, on its inner surface, the organ of Corti, which activates the sensitive hair cells and hence generates nerve pulses to the brain. See also, tectorial membrane and membranous labyrinth.

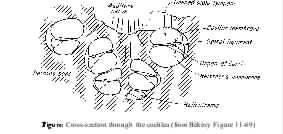

Cross-section of cochlear canal.

From Handbook of Experimental Psychology, edited by S.S. Stevens.

© 1951 John Wiley & Sons. Used with permission of John Wiley & Sons.

BBN

Bolt, Beranek, and Newman. An important company in speech, linguistics, and computers. Beranek, one of the founders, is prominent in the early acoustics research literature.

Bell Telephone Laboratories

Bell Telephone Laboratories, better known as Bell Labs, is perhaps the best known research establishment in the world with leading edge research in a mind-boggling number of areas, originally funded by profits from telephone network operation. Formed in 1907 by the combination of AT&T and Western Electric Engineering Departments, but not named till 1925. A consent decree split AT&T in 1956, but Bell Labs continued as one of the world's top two or three speech and communication research laboratories. In 1996, AT&T underwent another major re-organisation and splitting to meet changing world market conditions. Bell Laboratories now forms part of Lucent Technologies. See the relevant Lucent Technologies history page on their web site. A web site specific to Bell Labs is also available. Unix was invented there by Dennis Ritchie and Ken Thompson; James Flanagan, of speech research fame, was there; Claude Shannon, of information theory fame, was there. The sound spectrograph was invented there by H.K. Dunn. The first work by Homer Dudley and his colleagues on vocoders and speech communication was carried out there. The list goes on. The Bell Systems Technical Journal provides a major collection of papers on their research.

beats

When two pure tones fairly close together in frequency are sounded together, a listener hears the intensity waxing and waning at a rate that reflects the difference between the two tones precisely. The effect is due to the successive cancellation and reinforcement of one tone by the other as they get in and out of step (in and out of phase). Over part of the range where difference effects occur, many listeners hear a third tone of the appropriate pitch (i.e. the difference frequency).

bilabial

Of, or with, two lips (from the Latin).

binary digit

A representation of two possible states ("1" and "0") which may form part of the representation of a number in base 2 arithmetic. See also bit.

binaural

Pertaining to two ears. See diotic and dichotic.

bit

Strictly speaking, a unit of information. In common parlance, a bit is a binary digit, which is a physical signal (typically in a computer memory, register, etc.). It has been suggested that since the term bit (binary digit) is used in information theory as a fundamental unit of information measure, the term binit (binary digit) should be used for the physical signal. The reason: although a binit can theoretically transmit one bit of information, it can only do so under noiseless (and hence impossibly perfect) conditions. See information and redundancy.

black box

A device whose detailed internal construction is unknown, but which has some (usually desired) input-output relationship. It originated during the second world war, due to the practice of painting aircraft electronic boxes black and ensuring that the insides were secret.

bone conduction

The path of sound transmitted into the cochlea by vibration of the surrounding bone, however vibration of the bone is produced.

bony labyrinth

The passages inside the temporal bone that contain the mechanical parts associated with hearing and balance.

see membranous labyrinth and cochlea

breathy voice

During phonation, the vocal folds vibrate from relatively open to relatively or completely closed. If the folds are not completely closed, air will pass between the folds and inject random energy into the vocal tract, which adds noise or "breathiness" to the overall voicing energy. The small gap that remains during closure is called a "glottal chink", and is characteristic of many female vocalisations.

BSTJ

The Bell System Technical Journal. An important research journal published by the Bell Labs. Volume 57, Number 6, Part 2 for July-August 1978 was the original technical publication on Unix, describing in a collection of papers work which started in 1969 when Ken Thompson started working on a cast-off PDP-7 computer and Unix was conceived. Shannon's original work on information theory was first published there. An important source of original work on speech communication and procesing. See Bell Telephone Laboratories.

buzz/hiss switching

That part of a speech bandwidth compression system concerned with detecting and implementing the change in excitation corresponding to the contrast between voicing and fricative noise. In its simplest form a circuit is provided that detects voicing (not an easy or entirely solved problem) and switches the excitation of the synthesiser part to "buzz" (voiced excitation) or "hiss" (random excitation) accordingly. Of course, such a simple system does not produce correct voiced fricatives. See voicing detection.

capacity

The rate at which information (bits) may be transmitted over a defined channel. See also bit.

CCRMA

Centre for Computer Research on Music and Acoustics, at Stanford University, original home of Perry Cook, Julius Smith and others. Perry Cook is now at Princeton University.

cells of Claudius

Cells capable of generating D-C potentials and lying on the basilar membrane beyond the outer edge of the organ of Corti.

centre clipping

see clipped speech.

cepstral analysis

If the logarithm of the spectral amplitudes produced by a Fourier analysis of a time series is, itself, treated as a time series (sic) and subjected to a second Fourier analysis, the envelope is broken up into the underlying fine structure (pitch-, or excitation-determined in the case of voiced speech) and broad envelope characteristics (formant-, or vocal-tract-filter-determined in the case of speech). The terms of the new Fourier series are now called rahmonics (instead of harmonics), and the distribution of rahmonics is called a cepstrum which displays the signal in the quefrency domain. Such analysis is very attractive for speech since, in theory, and to a large extent in practice, one can take the cepstrum, remove some component and then carry out an inverse transform back into the frequency domain. Thus, for example, one can remove the effect of the fine structure on the spectrum and leave only the formant envelope. At the same time, the presence of voicing, and its frequency, may be determined from the component removed.

cepstrum

see cepstral analysis.

cerebellar vermis

see auditory pathways.

channel vocoder

see vocoder.

clipped speech

a speech waveform makes amplitude excursions about some zero level as time progresses. Removal of some of these in various amplitude bands is called "clipping." Peak clipping refers to removal of the largest positive and negative amplitude bands (leaving flattened peaks and troughs not exceeding the largest bands remaining). Centre clipping involves the removal of the centre bands of amplitude. The following:

Peak and centre clipped speech

illustrates these concepts. The amount of clipping is expressed in terms of the number of decibels by which the maximum amplitude is reduced:

clipping = 20 log (A0/A) db,

where the reference is A (the original amplitude) and Ao (the clipped amplitude), but the number of decibels is kept positive by inverting the ratio to keep it greater than 1. Clipping need not always be symmetric about the zero axis, though it usually is. It introduces distortion which may or may not be audible.

coarticulation

The effect of of speech sounds that are fairly close together upon each other. The vowel at the beginning of "abbey" is not the same as the vowel at the beginning of "abu", because coarticulation works backwards as well as forwards, and affects more than just the immediate neighbours. It is due at least in part to the anticipation of articulations to come, and the effects of articulations that are past upon the current posture, and hence upon the sounds that are produced at any given moment when speaking.

cochlea

That part of the bony labyrinth concerned with transducing mechanical pressure waves into nerve signals. The active part is the basilar membrane and associated structures. Its name comes from the Latin meaning "snail" which is what the cochlea spiral resembles. The whole structure is deeply embedded in the temporal bone and exceedingly difficult to work on, either surgically or experimentally. Georg von Bekesy obtained a Nobel prize for his work on hearing, which involved exceedingly delicate dissection, and study of the cochlea and other related structures, by careful ingenious experiments. The space inside the cochlear spiral is a fairly uniform tube of total diameter about 5 mm, 35 mm long, wound around the modiolus (or centre-post) in a decreasing spiral, from the start near the round and oval windows to the end where is found the helicotrema, making roughly 2 1/2 turns. This space is divided longitudinally into two (the scala vestibuli and the scala tympani) by the cochlear partition—itself a fluid filled duct containing among other things the organ of Corti. The cochlear partition is filled with endolymph, while the scala are filled with perilymph, and are joined by the helicotrema. Sound pressure waves are transmitted into the scala vestibuli by the stapes acting on the oval window. The complex hydrodynamic and elastic behaviour of the fluids and structures as the pressure waves affect the fluids on both sides of the cochlear partition, lead to frequency discriminating nerve signals being generated in monotonic progression from low to high frequency along the basilar membrane by the relative movement of the tectorial membrane and the hair cells. The nerve pulses at any given frequency below about 3000 hz tend to occur in phase with the input sound pressure waveform, which is the basis of the "VolleyTheory" of pitch perception.

Cross-section of cochlea canal

(From Handbook of Experimental Psychology, edited by S.S. Stevens.

© 1951 John Wiley & Sons. Used with permission of John Wiley & Sons)

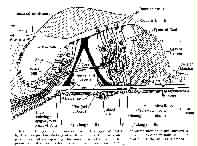

Cross-section of Organ of Corti

(From Handbook of Experimental Psychology, edited by S.S. Stevens.

© 1951 John Wiley & Sons. Used with permission of John Wiley & Sons)

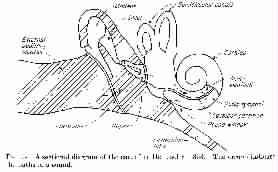

Diagram of ear components

(From Experiments in Hearing, by Georg von Békésy.

© 1960 McGraw-Hill Book Company. Used with permission of McGraw-Hill Book Company.)

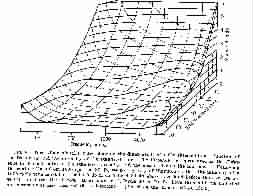

Figure 6: Cross-section of cochlea.

(From Experiments in Hearing, by Georg von Békésy.

© 1960 McGraw-Hill Book Company. Used with permission of McGraw-Hill Book Company.)

cochlear duct

The space inside the cochlear partition. It is filled with fluid called endolymph. See scala media.

cochlea microphonics

There are first order and second order cochlear microphonics which, physically, are varying electric potentials recorded from the perilymph usually at the round window. The first order microphonics have a form very like the pressure waveform of the input sound. They are believed to have no role in hearing to originate from the organ of Corti. The second order microphonics are associated with the operation of the hair cells which also generate the nerve pulses on which auditory sensation actually depends.

cochlear nucleus

see auditory pathways.

cochlear partition

see cochlea.

cocktail party problem

Idiomatic name (derived by analogy) for the problem faced when speech is masked by other speech—the problem, in fact, faced by conversationalists at cocktail parties! Humans have unexplained abilities in accomplishing this task, though some cues (common pitch structure, signal level, directional cues, lip reading cues, semantic context, voice quality) all undoubtedly play a part. Listening to someone at a cocktail party, and listening to a recording taken of the same conversation are quite different tasks, the latter being much more difficult.

coding

The process of mapping one set of symbols onto another. Coding may be carried out in order to obtain information security (encryption), to combat noise (by building in noise-resisting redundancy), or to maximise the rate of information transmission in a communication channel (by matching the characteristics of the source of information to the characteristics of a channel. Morse code, invented by Samuel Morse long before Shannon derived his important results on the subject, provides an excellent match between English letter frequencies and a binary channel.

compression

A term applied to the process of removing redundancy from collections of information. Such collections contain more physical data than are necessary to represent the information content (information in the sense developed by Shannon). Reducing the physical data to the absolute minimum needed to represent the information results in maximum compression. It has been estimated that the change between typical successive frames of video information can be represented by less than two bits.

conductive deafness

Deafness due to some defect in transmitting sound pressure waves from the air to the oval window. See also nerve deafness.

confusion matrix

A square matrix having rows (say) corresponding to stimuli and columns (say) to responses, showing, in each cell, a number which represents the probability of confusion, or number of confusions in a given test, occurring between the various stimulus choices when making responses, during a psychophysical experiment. For example, a number of words may be presented to subjects under some condition of noise or distortion and listeners responses recorded. Mistakes will be made, and the pattern of confusion can be preserved as a confusion matrix. In using such data it is vital to distinguish significant confusions from those due to chance. The conditions of such an experiment are such as to cause mistaken responses. It is only the systematic mistakes that are of interest. Mistakes that are not due to some systematic effect will be distributed among possible responses on a purely chance basis. The usual statistical techniques may be used to set levels of significance and detect systematic confusions that represent systematic effects, thereby increasing our understanding of speech perception or production.

connotation

The associative or indirect meaning, as opposed to the direct meaning—implying the attributes while denoting the subject. Intensive as opposed to extensive indication of some set. See also denotation.

consonant

As opposed to vowel. A stricter dichotomy applies between related contoids and vocoids. Consonants (contoids) are distinguished from vowels (vocoids) by a higher degree of constriction in the vocal tract.

context

The surroundings or co-occurring circumstances of an entity or situation. In language, a phoneme occurs in the context of other phonemes, during utterances, and the phonetic context (among other things) determines the particular allophone that occurs. Allophones are different acoustic variations of the basic (abstract) phoneme category. At a higher level, a word occurs in the context of other words. If the word is not clearly heard, there will very likely be enough contextual redundancy to allow the word to be recognised by inference.

contour spectrogram

In a conventional spectrogram, the amount of energy at a given frequency and time is represented as grey-scale values which are not easily quantified. The apparatus may be modified by including a circuit which generates pips each time the marker output crosses one of a set of internally generated reference levels. In the final analysis these extra dots join up to form contours of energy on the output just like the contours marking the height of the ground on a map. The grey scale marking is still generated and the result is a highly readable, quantifiable energy display.

contralateral

The opposite side.

coronal section

A section through an organism orthogonal to the axis running from head to tail.

cortex

The outer layer or portion of an organ—especially the outer layer of the brain, which is newest in evolutionary terms.

Corti ganglion

see auditory pathways.

cps

Cycles per second. Now termed herz, abbreviated hz.

creaky voice

See glottal flap.

critical band

If a pure tone is just masked by white noise covering the entire audio spectrum, it is found that the bandwidth of the noise may be progressively reduced, without affecting the masking, until it covers a band around the frequency of the pure tone of a certain critical width. Further reduction of the white noise band, either raising the lower frequency limit, or lowering the upper limit, causes a tone that was just masked before to become audible. This band is called the critical band. It is thought (Broadbent, for example) that it may correspond to some kind of neurological "catchment area" on the basilar membrane. The width is around 50 hz at 100 hz, and increases to 1000 hz at 10,000hz. Between 50 hz and 2,000 hz the critical band remains between 50 and 100 hz and then rises roughly as the logarithm of frequency. Components of the noise outside the critical band do not contribute to the masking of the tone. (This fact should not be allowed to confuse the issue when a tone is masked by noise outside the band (e.g., a high frequency tone may be masked by a low frequency tone with around a 40 db threshold shift).

cross-talk

Leakage of unwanted signals into a communication path from adjacent paths. An extreme case occurs at cocktail parties, as far as speech is concerned, but (for example) cross-talk between telephone lines causes problems (the term "line" here includes the exchange).

CSR

Continuous Speech Recognition. Automatic Speech Recognition in which the speaker speaks naturally, rather than trying to pause between words.

cybernetics

A term originated and defined by Norbert Weiner as "the science of control and communication in animals and machines". The term has been much degraded since then (there is a book entitled "The Psycho-Cybernetics of Sex" for instance), but it is still useful, with care.

cycles per second

A term replaced by herz (hz). The term is self-explanatory allowing that a "cycle" is some repeating progression of entities, operations or values.

cyton

The body of a nerve cell as opposed to its various processes.

damage risk

In relation to hearing this refers to the likelihood that a given noise exposure will result in permanent hearing loss (NIPTS—noise induced permanent threshold shift). The subject is complicated since exposure history, noise spectrum and other characteristics all contribute. The likelihood of NIPTS is usually inferred from TTS (temporary threshold shift) data measured in the laboratory. In general, a noise that produces no TTS will produce no NIPTS, if exposure is limited to no more than eight hours per day, with a 16 hour rest period between exposures.

db

See decibel.

dbm

A decibel scale based on a reference value of 1 milliwatt in 600 ohms and used by broadcast engineers. The equivalent voltage across a 600 ohm register is 0.775 volts-rms. A steady tone is required for calibration. Peak program meters habased on this reference, but calibration marks are not in dbm directly. See rms

decibel

A dimensionless unit of power (energy) measurement, abbreviated to db, which expresses power as a ratio between the target power and some defined reference: thus, for some measured power W, db re. Wo = 10 log (W/Wo) (taking logs to the base 10).

The constant "10" is a multiplier to convert the "bels" resulting from the application of the basic formula into decibels (1 bel equals 10 decibels). The reference (in this case Wo) must always be stated. The standard for speech and noise is approximately 10-12 watts/m2. The reference or base is very often given as a pressure, rather than a power. Thus the base for speech and noise in terms of pressure is 0.0002 dynes/cm or 2 x 10-5 nt/m2, but translating this into power (energy) requires a knowledge of the air characteristics prevailing at the time. This emphasises that any db comparisons based on pressures must be transmitted in a medium of identical acoustic impedance. Under these assumptions, power being proportional to the square of pressure, the formula becomes:

db re po = 10 log (p2/po2) = 20 log (p/po).

Being logarithmic, the measure is well suited to quantifying the large range of intensities encountered in acoustic measurement.

DECtalk

The most popular and successful text-to-speech system over the last few decades, based on work by Jonathan Allen, Dennis Klatt, their colleagues and their students on MITalk at MIT. The system uses a formant synthesiser, togther with a dictionary, letter-to-sound rules and some grammatical analysis to convert ordinary text into spoken words. There are a number of look-alikes and derivatives. The work is well described in the book by Allen, Hunnicutt and Klatt: From text to speech: the MITalk system, published in 1987 by Cambridge University Press.

dendrite(s)

The input process(es) of a nerve cell.

denotation

The meaning of something by direct example. Extensive as opposed to intensive definition. See also connotation.

dental

To do with teeth. A dental fricative would involve the teeth forming part of the constriction in the vocal tract that produced the fricative noise.

dichotic

See monotic.

difference limen

Limen is the Latin for threshold (plural limina). The difference limen is that difference or change in the stimulus that is at the threshold of detectability (since it varies from moment to moment in a random manner, it is normally defined as the change or difference that will be detected in 50% of trials). It is abbreviated to DL and is the same as the Just Noticeable Difference, or JND. The Reiz Limen or RL is the absolute threshold of detectability, below which the stimulus is not noticed at all in 50 per cent of trials. The TL or Terminal Limen is that limit beyond which the stimulus elicits pain. The DL is not constant for a given dimension of stimulus, as might be supposed. If we let DI stand for the DL at a given level of stimulus, then to a very close approximation:

DI/I = K (a constant) (Weber's Law)

where I is the intensity of the stimulus in the dimension concerned. In words "a stimulus must be increased by some fixed percentage of its current value for the difference to be noticeable". At 50 grams a 1 gram increment in a weight might just be noticed. At 100 grams, approaching 2 grams would be necessary.

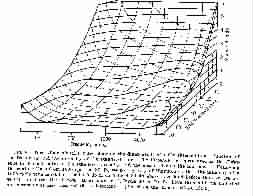

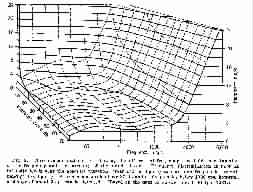

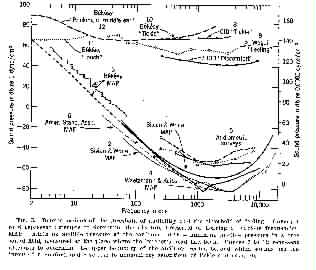

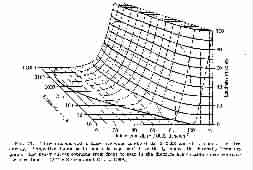

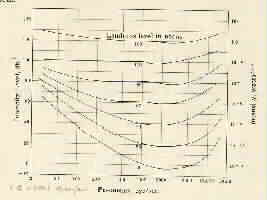

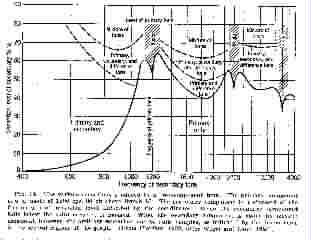

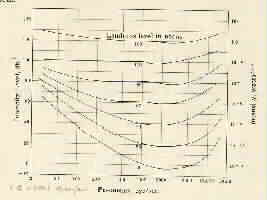

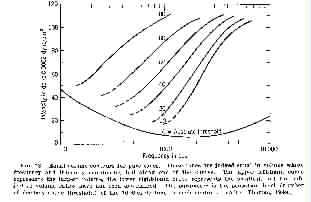

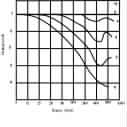

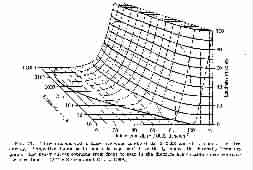

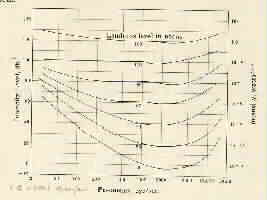

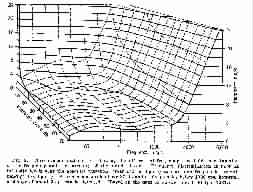

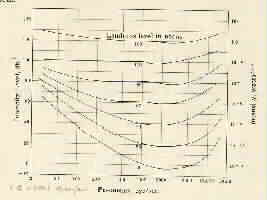

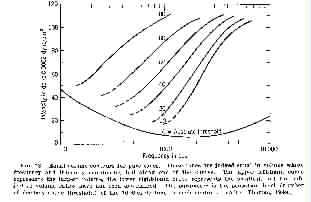

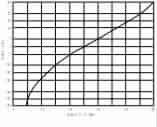

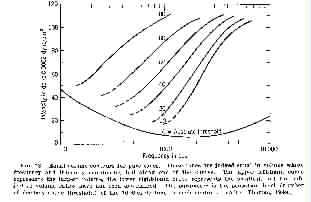

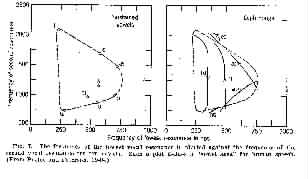

The following two figures show how the intensity and frequency difference thresholds vary with frequency and intensity of sounds. Note that Weber's Law is effectively incorporated into a measure of the DL based on decibels, and does not hold exactly for speech frequncy and intensity. See also method of average error, method of limits and method of constant stimuli

Differential intensity threshold versus frequency and intensity

(From Handbook of Experimental Psychology, edited by S.S. Stevens.

© 1951 John Wiley & Sons. Used with permission of John Wiley & Sons)

Differential frequency threshold versus frequency and intensity

(From Handbook of Experimental Psychology, edited by S.S. Stevens.

© 1951 John Wiley & Sons. Used with permission of John Wiley & Sons)

digram/digraph

Two letters appearing together, in order. A study of digram frequencies may be of value in automatic language processing, especially coding and compression.

dimensionless

Physical units and quantities are normally associated with dimensions of mass (M), length (L) and time (T). Thus acceleration has dimensions of L x T-2. Some units, however, are dimensionless since they represent ratios of quantities of the same kinds, or mixtures of dimensions that cancel. Thus any db scale value is dimensionless, and a pure number, being a ratio of two quantities of the same type.

diotic

See monotic.

diphone

A combination of two phones representing the instantiation of two successive speech postures. Since the interaction effects between the postures concerned are explicitly represented in diphone segments it is attractive as a basis both for synthesis and recognition on the theory that segmentation is carried out at places of minimum rate of spectral change, leading to a reliable algorithm for recognition, and simple assembly for synthesis. The success (or lack of it) of the approach reflects the validity of the underlying assumptions which are largely unstated. Thus synthesis based on assembling synthetically generated diphones, which have been carefully evaluated by relays of naive listeners, is relatively successful. Recognition has enjoyed less success, because appropriate segmentation is uncertain and difficult. Many "diphone" segments really comprise more than two segments because consonant clusters, for example, do not "obey the (unstated) rules". Whilst, in principle, around 1600 diphones could represent all the posture combinations in English, in practice, at least 3000 are required, and adding more offers much scope for improvement because coarticulation effects range over more than just two neighbouring speech sounds, so that at least 404 combinations of "tetraphones" (more than 2.5 million) might be considered necessary to account for all the phonetic level interactions, even ignoring other influences.

diphthong

A sound formed by time-sequential combination of two simple vowel articulations. Diphthongs are frequently regarded as phonemes in their own right. This is almost inevitable in view of the classical definition of phoneme, but is not too helpful for simple synthesis and recognition by machine. Consonant clusters are not regarded as different phonemes (except for affricates), so why should vowel clusters be regarded as separate phonemes (the standard reply refers to their distributional characteristics and, for the affricates, to the problem of juncture). There may be considerable modification of the component sounds compared to their "normal" realisation (reduction and shortening). Glides, which are very close to diphthongs are similarly considered phonemes in their own right, though they may be closely approximated by using segments of the related vowels, again shortened, and with diphthong-rate transitions. However, the glides also represent a much greater obstruction of the oral cavity than the related vowel sounds (which also leads to a somewhat different spectral character) so that they are properly identified as contoids (i.e. loosely speaking as "consonants"), rather than diphthong combinations. See triphthong.

diplacusis

A disparity in pitch perception between the two ears. One common way of inducing diplacusis is by using a loud tone to fatigue one ear. Judgements of pitch in the region of the fatiguing tone may then differ by as much as an octave between the two ears. The condition can exist to some degree, in many subjects, as a natural state. It may explain why unaccompanied folk singers conventionally stuff a finger in one ear whilst singing.

distinctive features

Distinctive features are defined for speech sounds as binary distinctions between polar extremes of a quality, or between presence versus absence of some attribute of the sound. It is an approach to speech segment description based upon Daniel Jones' idea of "minimal distinctions"—any lesser distinction between two sounds being incapable of distinguishing two words clearly. Distinctive features are thus closely related to phonemes and subject to the same limitations. Phonemes may be considered as concurrent bundles of distinctive features. Problems arise with consonants. (Preliminaries to Speech Analysis: the distinctive features and their correlates, by Jakobson, Fant and Halle, MIT Press 1969, is the original reference to this approach).

DL

See difference limen.

DTW

See Dynamic Time Warping.

Dynamic Time Warping

A process for normalising the time relationship between an unknown speech waveform and a reference waveform, carried out by reference to frequency domain data. If the corresponding parts of the varying speech signal for the reference and the unknown can be mapped onto each other, the problem of deciding whether they represent the same speech sounds is made much easier. See also time normalisation.

dyne

A unit of force, being the force required to give one gram of matter (a mass of 1 gram, that is) an acceleration of 1 cm/sec2. It is now obsolete, having been replaced by the newton in the SI system of measurement. One newton gives 1 kg mass acceleration of 1 m/sec2 and is thus 100,000 dynes.

dynes/cm2

A measure of pressure.

1 dyne/square centimetre = 0.1 nt/square metre = 0.1 Pa/square metre = 1 microbar = 0.011 kg/square metre.

Because of the wide range of pressures involved in acoustics, a logarithmic function of power ratios is used -- the decibel scale. Because this scale is based on power (energy) ratios, there must be an assumption of constant impedance in the transmission medium, when expressing pressure ratios, and the pressures must be squared, or the logarithm of the pressure ratio multiplied by 2, to convert to an energy ratio. In air at 20 degrees Celsius and standard pressure, 1dyne/square centimetre is equivalent to a power or intensity of:

2.41 x 10-4 watts/square metre (i.e. about 1/4 of a milliwatt)

See acoustic intensity, and decibel.

E/E's

Experimenter/experimenters.

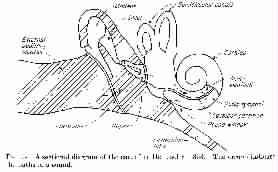

ear drum

The membrane dividing the outer ear (external auditory meatus) from the middle ear -- the air -- filled cavity containing the ossicles. The membrane has a shallow conical shape with the "handle" of the malleus ("hammer" or first ossicle) lying radially on the upper vertical radius, being hinged at the top edge. The bottom edge of the membrane has a more or less pronounced fold, which frees the lower edge and allows the ear drum to rotate the malleus about its hinge. As a result, the ear-drum acts as a piston in the sound transmission chain. Impedance matching with the air is achieved by the mechnical arrangements created by the ossicles and also by the construction of the basilar membrane/organ of Corti/tectorial membrane combination. Figures 5 and 9 provide two views, one more diagrammatic than the other, of the ear mechanisms. See also ossicles and tectorial membrane.

Sectional diagram of ear

(From Experiments in Hearing, by Georg von Békésy.

© 1960 McGraw-Hill Book Company. Used with permission of McGraw-Hill Book Company.)

effectors

Organs (devices) for operating on the environment of an organism (or machine).

efferent

Conducting outwards, towards the periphery. Thus efferent nerves are those which carry impulses from the central nervous system to the effectors (muscles and glands).

enclitic

See proclitic.

endolymph

Viscous fluid filling the cochlear partition (or duct). See cochlea

envelope

Roughly, the "shape" which contains components forming an object of interest. In mathematical terms, it is similar to the convex hull, though will usually involve concave portions as well. The real criterion is the amount of detail that the shape reveals. Thus the envelope of a narrow band spectral section would approximate a wide band spectral section. The envelope of a speech time-waveform would approximate the function obtained by joining all the positive peaks together and all the negative peaks, smoothing out any significant kinks. The form of an envelope function depends quite a lot upon the smoothing function applied to the original function.

ergonomics

From the Greek "ergon" work -- literally ergonomics means "the study of work", but is actually the British term for human factors engineering, which involves a study of the working conditions most suited to the many tasks an operator is asked to perform.

esophageal speech

see oesophageal speech.

eustachian tube

A narrow canal which connects the middle ear to the throat. It is normally closed in humans, except when swallowing or blowing. In some creatures, notably the frog, a very wide short eustachian tube acts to make the ear drum act like a noise-cancelling microphone for self-generated sounds, with obvious benefits.

external auditory meatus

See auditory meatus

FH2

The frequency of a filter used in a Parametric Artificial Talker to simulate the spectrum of sibilant sounds (fricatives -- such as those at the beginning of "sun" and "shun"), which are produced by forcing air through a narrow constriction located towards the front of the oral cavity.

Fx/Fo

The glottal vibration frequency or "larynx" frequemcy in speech, especially in synthetic speech. Fo is strictly the fundamental frequency of a repetitive time-waveform. The glottal vibration frequency is only quasi-periodic, but it is convenient to treat the glottal waveform as instantaneously periodic (sic) and talk about the fundamental frequency when analysing the frequency spectrum of speech. See fundamental frequency

F1/F2/F3

Formant 1/formant 2/formant 3. As speech synthesiser parameters these represent the frequencies of the resonant peaks in the output spectrum. There are higher formants (F4 ... Fn) but these are less important in making primary distinctions between speech sounds. See formant and articulatory synthesis.

falsetto

A mode of voicing (principally associated with male speech) in which an unnaturally high Fo is produced by setting up an abnormal mode of glottal vibration.

Fast Fourier Transform

A method devised by Cooley & Tukey (Mathematics of Computing 19, 297- 301, April 1965) for computing the coefficients of the discrete Fourier transform (the digital computer equivalent of the Fourier transform). The method is very much faster than previous algorithms and a considerable literature has developed on this special topic because of its importance in signal analysis, and the widespread use of computers in laboratories around the world. See also Linear Predictive Coding (LPC).

FFT

see Fast Fourier Transform.

filter

A transmission device of limited bandwidth.

foot

Unit division of speech according to the occurrence of stressed syllables ("beats"), rather as music is divided into bars. Each foot begins with a syllable bearing primary stress, and ends just before the following primary stress.

formant

A formant is a significant peak in the spectral envelope of the frequency spectrum characterising speech. In general, it varies as a function of frequency against time. The lowest three formants are considered adequate for good intelligibility, though in synthetic speech using a so-called "formant synthesiser" additional higher poles are required to compensate for the missing higher formants and the radiation impedance from the mouth. Formants appear as dark bands in a broad-band spectrogram. See articulatory synthesis.

formant synthesiser

A "terminal analogue" type of synthesiser which models speech in the acoustic domain by using filters to simulate the resonant behaviour of the vocal and nasal passages. Pulsed or random energy is fed into the input to simulate the glottal pulses and/or aspiration, and additional random energy, shaped by filters is added to simulate fricative energy. The intensities are controlled directly and the energy balances are not well represented. Moreover, only the lowest three or four formants are properly represented and varied. The resulting voice quality is less than natural, even discounting the difficulties of representing dynamic variation, timing and intonation accurately. A "terminal analogue" is an input-output representation of the vocal tract behaviour rather than a model of its distributed acoustic properties. It is contrasted with a transmission-line or acoustic tube model which directly models the acoustic properties of the vocal apparatus as a collection of tubes and energy balances. The author was involved in the development of a synthesis system of the latter type. The paper "Real time articulatory speech synthesis by rules" describes the system.

formant tracking

The process of determining the frequency position of formant peaks automatically, especially the first three formant peaks. It is not as easy as it might seem. Decision criteria to distinguish significant peaks from insignificant peaks have to be set, and will be somewhat arbitrary; formants may come so close together that they form a single peak; and in some sounds the formant 1 peak may be considerably reduced in amplitude, or split by a nasal anti-resonance. Furthermore, formant peak frequency values may change very quickly in just those places where accurate determination is most important (e.g. the transitions associated with a stop sound), yet one strategy for eliminating noise from a formant tracker output is to apply continuity criteria to the values obtained. Clearly it would be useful to have a mechanism capable of making a binary distinction between rapid and slow movement, adjusting the application of the continuity criteria appropriately. The problem is one of many. It is typical of the problems encountered in the automatic analysis of speech.

frequency analysis

An analysis of a time varying signal into its individual frequency components. Also known as Fourier analysis.

frequency spectrum

The total range of frequencies needed to contain all components of the Fourier analysis of a sound. The frequency domain description of a phenomenon is, loosely, called its (frequency) spectrum. See also harmonics.

Fourier analysis

The decompostion of a complex waveform into sinusoidal components, and determination of the coefficients (amplitudes) and phase of the various terms. This gives a Fourier series. Any physically realisable waveform can be represented to arbitrary accuracy. In dealing with non-repetitive waveforms there are problems. An impulse produces a uniform spectral density function in place of a Fourier series. For non-transient waveforms, a segment of the waveform is excised, and treated as repeated indefinitely. This arbitrarily selects the collection of sinusoids that underlies the transform and, in particular, implies a non-existent fundamental. In speech, which, strictly speaking, is non-repetitive, an excellent compromise is achieved by choosing the time-interval between successive glottal pulses as the unit for decomposition in this repeated segment fashion -- making the analysis so-called "pitch-synchronous". Spectrograms computed on a pitch-synchronous basis show considerably more coherence than those computed on the basis of arbitrary segments. Of-course, the method should be called glottal-pulse synchronous. See pitch and harmonics.

Fourier series

That which is produced by Fourier analysis.

Fourier transform

See Fourier analysis.

frequency

The repetition rate of a repeating event. The reciprocal of the time-interval between successive repetitions of the event, especially successive repetitions of a cyclic waveform.

frequency analysis

See Fourier analysis.

frequency domain

A description of a system or phenomenon based on frequency and phase measures. See time domain.

frequency spectrum

The range of frequencies allocated or considered or involved in dealing with frequency-related phenomena.

fricative

A speech sound produced by forcing air through a narrow constriction, thereby generating noise due to air turbulence which is characteristic of the constriction—so-called friction noise, and is somewhat shaped by any coupled cavities. If the vocal folds are maintained in an open position during speech articulation, so that they do not vibrate and there is no voicing, then with constriction higher up in the vocal tract (say between tongue and palate) an unvoiced fricative results. The acoustic correlates of an unvoiced fricative are: the offset and onset of voicing; early disappearance and late reappearance of F1; formant transitions; and a spectral energy distribution appropriate to the particular place of articulation. If the vocal folds vibrate, then a voiced fricative results. The friction energy of a voiced fricative is usually somewhat modulated in amplitude at the voicing frequency. During the constrictive part of a voiced fricative there is a drop in the pitch frequency (one form of micro-intonation) due to the supraglottal pressure increase caused by the vocal tract constriction. See also voiced stop.

fundamental frequency

The lowest component frequency of a periodic waveform. See Fourier analysis.

glottal flap

Excitation of the vocal tract by what are effectively isolated glottal pulses. This happens typically at the ends of utterances by some male speakers, as the voicing frequency (pitch) is allowed to fall dramatically. It is similar to creaky voice, which occurs at the beginning as well as the end of utterances for many speakers of educated Southern British English ("RP" from "Received Prunciation", the accent formerly expected of professional radio announcers and journalists in Britain). In both, the glottal rate reaches abnormally low values, and the pulses are so well separated that they are easily seen as separate in a spectrogram.

glottal pulse

The result of one cycle, opening and closing, of the glottis during voiced speech. One cycle of the glottal waveform

glottal rate/frequency

The frequency of vibration of the vocal folds that surround the glottis. Normally it is not constant, even for two successive cycles.

glottal waveform

The volume velocity waveform of air passing through the vocal folds (glottis) during voiced speech. For normal speech, it approximates a triangular waveform at normal voicing effort. See harmonics.

glottis

The opening that varies from nothing, through a slit of increasing width, up to a wide open triangle formed between the vocal folds. When the tension and position of the vocal folds is suitably adjusted, and air pressure applied from the lungs below, fairly regular puffs of air break through the lips of the vocal folds and provide so-called "voiced" excitation of the resonant cavities of the vocal apparatus. The vocal folds are located in the larynx.

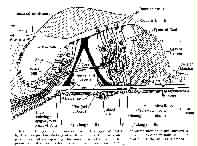

hair cells

Cells lying in the organ of Corti which provide mechanical-to-electrical conversion of sound vibrations as rendered at the tectorial membrane, and hence generate electrical activity in the auditory neurons (from which arise the fibres of the VIIIth cranial nerve, which also innervates the vestibular apparatus). Each cell has small hair-like processes which are mechanically stimulated by lateral movements of the tectorial membrane, with respect to the organ of Corti. These movements result from the displacement of the basilar membrane caused by pressure waves transmitted into the cochlea. It is of interest that the neurons of the auditory nerve, unlike most others, do not regenerate following injury to the processes, but generally die. The hair cells innervated by such a neuron then also die. It was shown in 1988 that the equivalent cells in chickens regenerate after damage (Science, June 1988, Corwin, University of Hawaii and Cotanche, Boston University). This holds out hope that, one day, it may be possible to help or even cure humans with noise-induced hearing loss. See Figure 4 and Figure 10.

half octave band spectrum

See octave band spectrum.

hard palate

The roof of the mouth (oral) cavity, which divides it from the nasal cavity. It lies between the velum and the teeth. See alveolar ridge.

harmonics

When a note is played on a musical instrument, a sound is produced having a certain pitch, with overtones. The pitch is closely related to the fundamental frequency of vibration of the mechanism producing the note, while the overtones, comprising some selection of frequencies that are integer multiples of the fundamental, give the instrument its characteristic timbre. Frequencies falling at integer multiples of the fundamental frequency are called harmonics and a Fourier analysis of a repetitive waveform effectively decomposes a complex waveform into the fundamental and its harmonics. A triangular waveform (symmetrical linear rise and fall during the first and second half cycles respectively) contains all odd harmonics. So does a square wave (being a differentiated triangular wave, this is a logical consequence). A triangular wave is a special case. Any waveform that has a rise and a fall in two linear sections comprising a full cycle will contain all harmonics except those that exactly divide both rise and fall periods an integer number of times (not necessarily the same for the two sections). The glottal waveform is slightly assymetric and therefore contains most harmonics in the speech frequency range. Missing or reduced harmonics can affect the output spectrum dramatically when convoluted with the filter function of the vocal tract. The characteristic sound quality of a church bell results from the rather strange distribution of frequencies it produces which are by no means all harmonically related. A tubular bell, which rings with a normal set of harmonics produces a much less interesting sound than a church bell.

Haskins Laboratory

One of the two or three most important speech research laboratories in the world. Much of the original work on elucidating speech cues was carried out there, and some of the first serious synthetic speech was produced there in the 1950s using a spectrogram playback apparatus known as Pattern Playback. Frank Cooper, Pierre Delattre, Alvin Liberman, Leigh Lisker and many others laid the ground-work for speech synthesis by rules. Their current research work is now available on their website, replacing their well-known annual reports.

hearing loss

A shift in hearing sensation level and thus defined as:

HL = 10 log (I/Io) db

where I is the intensity (sound energy) at the threshold of hearing for the patient, and Io is the intensity at the threshold of hearing for normal persons (i.e. a statistical average over normal subjects).

helicotrema

The sole connection between the scala vestibuli and the scala tympani, the two spaces either side of the cochlear partition. The helicotrema allows volume displacement of the fluid in the scala vestibuli by the oval window movements caused by the stapes to be transmitted into the scala tympani, ultimately causing volume displacement of the round window. This streaming of the fluid, induced by pressure waves on the ear drum, produces maximum pressure differences across the cochlear partition, and hence distortion of the basilar membrane, at places corresponding to frequency of excitation (for sounds in the normal hearing range). As a result, nerve pulses are generated in different fibres corresponding to different frequencies. The pulses themselves are also grouped to reflect the frequency and phase of the sound. The highest frequencies produce displacements of the basilar membrane near the windows, the lowest near the helicotrema, the range being about 20 khz down to 20 hz. See basilar membrane, volley theory.

Hensen's cells

Cells forming part of the organ of Corti and capable of generating steady voltage potentials.

herz

The unit for measuring repetition rate of a repeating event, especially a regularly cyclic event. See cycles per second.

Hidden Markov Model

A technique for recognising speech based on a specialised state machine in which the states represent the varying spectrum of the speech signal, classified into discrete categories, and linked by transitions. Different segments of speech drive the HMM through different paths. The path allows the segment or succession of segments and therefore the speech input to be identified. There is a vast literature on the topic, as the algorithms for creating, searching, backtracking and so-on within the network, are very important to economy and success. See also segment synchronisation

high back vowel

See vowel.

high front vowel

See vowel.

HMM

See Hidden Markov Model.

hz

An abbreviation for herz.

IEEE-ASSP

Institute of Electrical & Electronics Engineers Transactions on Acoustics, Speech and Signal Processing. An important source of current research with an emphasis on electronics and signal processing. Published by the Institute of Electrical & Electronics Engineers.

IJMMS/IJHCS

International Journal of Man-Machine Studies, now renamed the International Journal of Human-Computer Studies. An important source of current research with emphasis on all aspects of systems involving Human-Computer Interaction (HCI, also known as Computer-Human Interaction -- CHI). Published by Academic Press.

incus

See ossicles.

inferior colliculus

See auditory pathways.

information

Information is the subject of Claude Shannon's original theories on the transmission of information (Bell System Technical Journal volume 27 1948: A mathematical theory of communication, pages 379–423 and 623–656). Information is measured as the logarithm of the probability of a message. In the case of logarithms to the base "2", the measure becomes a bit for binary digit. Only when there is no noise, and the possibilities are equally likely, can a physical "1" or "0" convey one bit of information. The problems of coding to deal with noise, or unequal message probabilities, are the province of information theory and coding theory. See redundancy.

information theory

See information and redundancy.

inner ear

The cochlea and all it contains.

intelligibility

A sound may or may not be heard. If it is heard, it is audible. To be intelligible, it must be a speech signal, and the listener must recognise the nonsense syllable, word, sentence, or whatever that was sent to him. The threshold of intelligibility is some 10 to 15 decibels higher than the threshold of audibility for the same speech under the same noise conditions (Hawkins & Stevens 1950). Tests of intelligibility are used to evaluate communication systems and situations. See articulation index.

intensity

See acoustic intensity

intensity spectrum level

Is defined as the acoustic intensity per herz for the noise in question. If the band of frequency containing the noise is DF hz wide, then:

ISL = 10 log(I/(Io.DF)) db re. Io = IL - 10 log DF

where IL is the sound intensity level. See also pressure spectrum level, acoustic intensity, sound intensity level, sound pressure level and decibel.

interval scale

See scales of measurement.

intonation

Often referred to as the "tune" of an utterance. The intonation pattern of an utterance is the time-pattern of pitch-variation during an utterance and serves several purposes at different levels. At the segmental level, so-called "micro-intonation" provides cues to constrictive postures (contoids) of the vocal apparatus because the voice pitch (glottal frequency) rises and falls as the pressure difference across the glottis varies as a result of the changes in supraglottal airflow caused by the varying constrictions. At the prosodic (suprasegmental) level, the intonation pattern gives clues to syllable, word, and sentence structure. At the semantic level intonation affects meaning. It should not be thought that intonation achieves its effect in isolation, however, even though it would have a considerable effect under such conditions. Of considerable importance is the precise relationship between the pitch movements and levels that make up the intonation pattern, and the segmental features, rhythm cues, and perceived loudness effects that run in parallel. In tone languages (such as Chinese), intonation also directly affects the meanings of words, since words with different meanings may differ only in the tone applied. See stress, prosody, salient and prominence.

IPO APR

The Institut voor Perceptie Onderzoek, part of the Technical University of Eindhoven in the Netherlands. The IPO works on speech perception research, and computer-human interaction amongst other topics. They have carried out seminal work on English intonation.

ipsilateral

The same side.

isochrony/isochronicity

The theory of isochrony states that different rhythmic units in speech (feet—Abercrombie/Halliday, rhythm units—Jassem) have a tendency to be of equal duration regardless of the number of segments or syllables they contain. Studies have shown that the ratio between the duration of the longest rhythmic unit to that of the shortest is as much as 6 or 7 to 1. Therefore American linguists (e.g. Lehiste) consider that most of the perceived isochrony effect is just that—a perception on the part of the listener, rather than an objective tendency towards equal duration. A statistical study by the present author, with Jassem and Witten, showed that the tendency towards isochrony was one of the only three independent factors determining speech rhythm. In setting the duration of segments, when modelling speech rhythm, the identity of segment accounted for about 45% of the variance in duration; whether the rhythmic unit was marked (tonic or final) accounted for about 15% of the variance in duration; and the segment durations then needed to be corrected by an inverse linear regression based on the number of segments in the rhythmic unit, accounting for about 10% of the variance in duration. The effect was almost non-existent for proclitic syllables (anacruses in Jassem's terminology), and Jassem's theory therefore excludes anacruses in estimating rhythmic unit duration for purposes of testing and elaborating the theory. See salient, foot, rhythmic units and anacrusis.

IWR

Isolated Word Recognition. See automatic speech recognition.

JASA

The Journal of the Acoustical Society of America. A basic source of important work in speech, psycho-acoustics, and other topics. Published by the American Physical Society and extremely well indexed.

JSHR

The Journal of Speech and Hearing Research. Published by the American Speech and Hearing Association.

JVLVB

The Journal of Verbal Learning and Verbal Behaviour. Published by Academic Press.

juncture

The difference between the utterances "night rate" and "nitrate" is one of "juncture"—where does the word boundary fall. Juncture is important. Thus the affricates in spoken English might be regarded simply as two successive speech postures in a row: but, for reasons of juncture and for reasons of distributional characteristics they are treated as distinct single phonemes. For example, "my chip" has the word boundary before the combined sound of "t" followed by "sh", whilst in "might ship", the juncture is in the middle. The combined "t-sh" sound is one of the English affricates. Although the dynamic acoustic elements are spectrally quite similar to the related simpler phonemes, the timing of the elements is significantly different, and signals the position of the word boundary.

Kay Sonagraf

See sound spectrograph.

kinaesthetic sense

A sense of the spatial relation and movement of its own frame possessed by a conscious entity. See proprioception.

labial

To do with a lip, lip-like part, or labium.

L & S

Language & Speech. A useful reference. Holme's early work on speech-synthesis-by-rules was published in this journal. Published by Robert Draper, Teddington, UK.

laryngograph

A device for determining the voicing frequency (glottal rate/frequency) with which a live talker is producing speech. Direct measurement of the frequency of vibration of the vocal folds may be determined on a pulse to pulse basis in a variety of ways involving electrical impedance, light beam modulation and so on. The original laryngograph worked by placing non-invasive electrodes either side of the speaker's larynx (on the neck) and measuring the high frequency impedance (impedance to a signal at approximately 1Mhz). Although the change in impedance as the vocal folds open and close is quite small, it is possible to pick up the varying contact of the folds, and convert successive intervals to a display of glottal pulses. Ideally some decision criteria as to presence versus absence of voicing should be built in to suppress indications during periods deemed voiceless. Setting up an adequate test of voicing versus no voicing is not as easy as it might seem.

larynx

A cartiligenous upper chamber of the trachea (or wind-pipe) that may be felt from outside the throat as the Adam's apple. The larynx contains the glottis which is bounded by the vocal folds. Enlargement of the larynx, and hence lengthening of the vocal folds, is a secondary sex characteristic for male humans, and is responsible for the voice "breaking" at puberty, and for the lower pitch of the mature male human voice.

lateral

To or toward the side: away from the mid-line. Thus a lateral consonant is produced by constriction allowing airflow either side of the tongue.

lateral lemniscate and nucleus

See auditory pathways.

limen

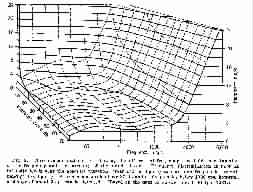

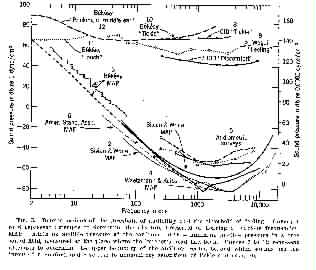

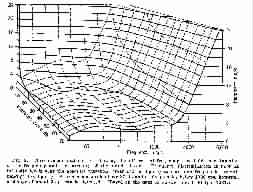

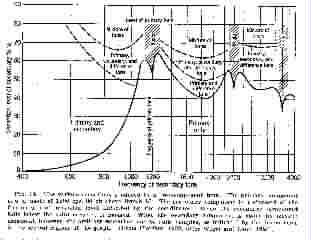

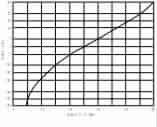

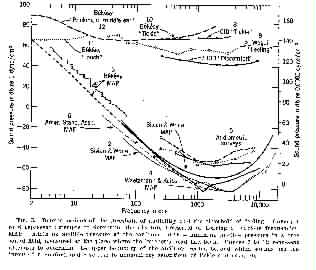

Threshold. An absolute threshold marks the boundary between what is just below the level of sensation and what is just above. A differential threshold marks the boundary between a difference in sensation which is just below detection, and a difference which is just detectable. The threshold of feeling or sensation marks the boundary below which feeling or sensation does not occur and above which it does. Thresholds represent statistical results from defined groups of subjects (usually subjects with normal sensory abilities). Individual differences are important and any particular individual may differ significantly from the statistical averages. Figures 6 and 8 illustrate absolutes thresholds of hearing, and differential frequency thresholds at various stimulus frequencies.

Thresholds of audibility and of feeling (various sources)

(From Handbook of Experimental Psychology, edited by S.S. Stevens.

© 1951 John Wiley & Sons. Used with permission of John Wiley & Sons)

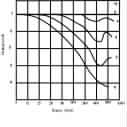

Differential frequency threshold against frequency & intensity of the standard tone